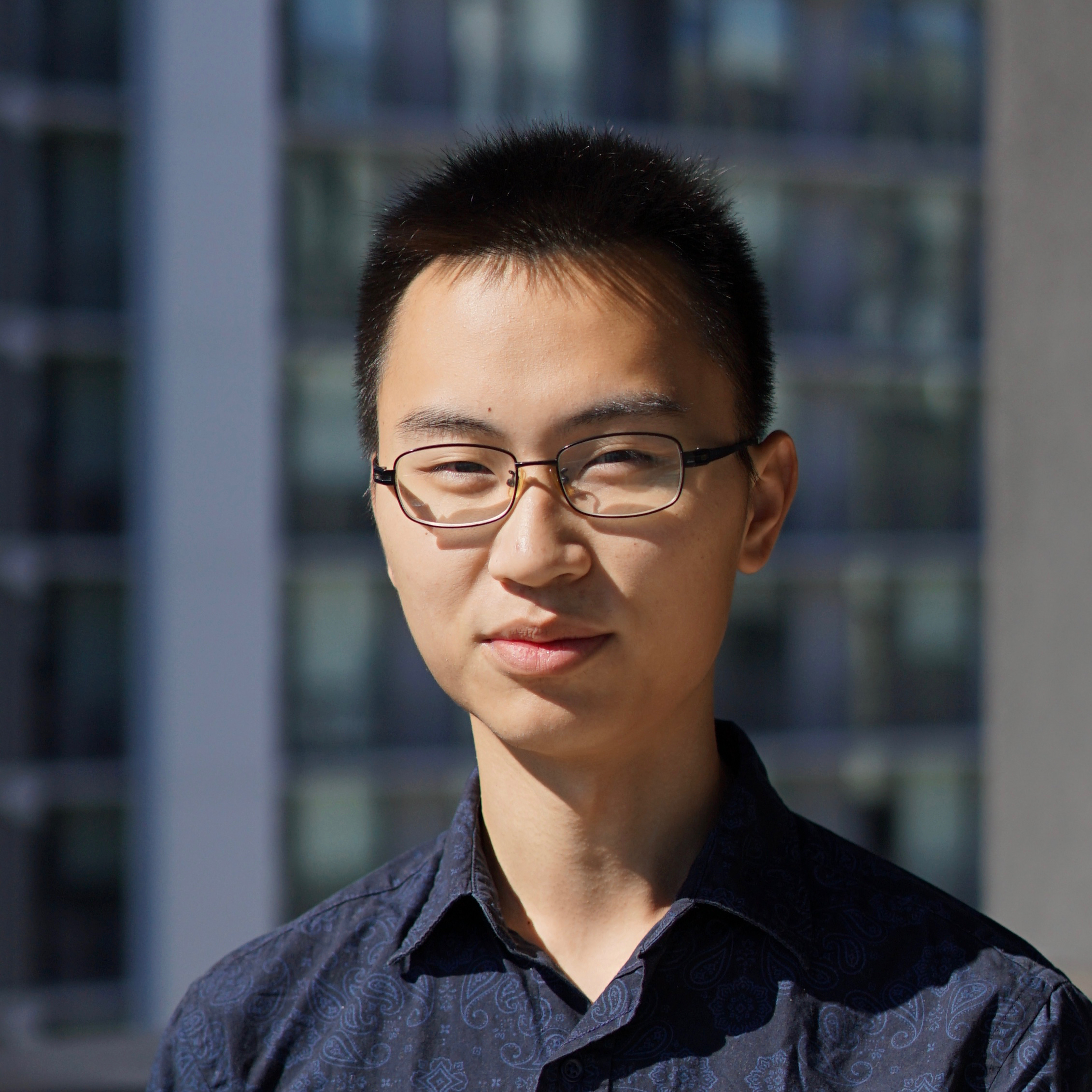

Xiaochuang Han

(You can call me Han, which is easier to pronounce and remember)

firstname.lastname@gmail.com

[CV] [Google Scholar]

Bio

I'm a PhD student in Computer Science and Engineering at the University of Washington, advised by Yulia Tsvetkov. I'm generally interested in natural language processing and multimodal generation. I have worked on topics such as codec-based multimodal generation, diffusion language models, inference-time model collaboration, and training data attribution. Before UW, I was a Master of Language Technologies student at Carnegie Mellon University. Before CMU, I was an undergraduate at Georgia Tech, advised by Jacob Eisenstein. I have been supported by the OpenAI Superalignment Fellowship (2024) and the Meta AI Mentorship Program (2023, 2022).

Selected Publications

JPEG-LM: LLMs as Image Generators with Canonical Codec Representations

arXiv preprint

David helps Goliath: Inference-Time Collaboration Between Small Specialized and Large General Diffusion LMs

NAACL 2024

Tuning Language Models by Proxy

COLM 2024

Trusting Your Evidence: Hallucinate Less with Context-aware Decoding

NAACL 2024

In-Context Alignment: Chat with Vanilla Language Models Before Fine-Tuning

arXiv preprint

SSD-LM: Semi-autoregressive Simplex-based Diffusion Language Model for Text Generation and Modular Control

ACL 2023

Understanding In-Context Learning via Supportive Pretraining Data

ACL 2023

Toward Human Readable Prompt Tuning: Kubrick's The Shining is a good movie, and a good prompt too?

Findings of EMNLP 2023

ORCA: Interpreting Prompted Language Models via Locating Supporting Data Evidence in the Ocean of Pretraining Data

arXiv preprint

Influence Tuning: Demoting Spurious Correlations via Instance Attribution and Instance-Driven Updates

Findings of EMNLP 2021

Fortifying Toxic Speech Detectors Against Veiled Toxicity

EMNLP 2020

Explaining Black Box Predictions and Unveiling Data Artifacts through Influence Functions

ACL 2020

Unsupervised Domain Adaptation of Contextualized Embeddings for Sequence Labeling

EMNLP 2019

No Permanent Friends or Enemies: Tracking Dynamic Relationships between Nations from News

NAACL 2019

Mind Your POV: Convergence of Articles and Editors Towards Wikipedia's Neutrality Norm

CSCW 2018